Dark matter, neutrinos and drug discovery: how AI is powering SLAC science and technology

Check out the second of a two-part series exploring how artificial intelligence helps researchers from around the world perform cutting-edge science with the lab’s state-of-the-art facilities and instruments. Read part one here.

In this part you’ll learn how AI is playing a key role in helping SLAC researchers find new galaxies and tiny neutrinos, and discover new drugs.

By Carol Tseng

Taming big data and particle beams: how SLAC researchers are pushing AI to the edge

Check out the first of this two-part series exploring how artificial intelligence helps researchers from around the world perform cutting-edge science with the lab’s state-of-the-art facilities and instruments. In this part you’ll learn how SLAC researchers collaborate to develop AI tools to make molecular movies, speeding up the discovery process in the era of big data.

Each day, researchers across the Department of Energy’s (DOE’s) SLAC National Accelerator Laboratory and the world collaborate to answer fundamental questions on how the universe works – and invent powerful tools to aid that quest. Many of these new tools are based on machine learning, a type of artificial intelligence.

“AI has the potential not just to accelerate science, but also to change the way we do science at the lab,” said Daniel Ratner, a leading AI and machine learning scientist at SLAC.

Researchers use AI to help run more efficient and effective experiments at the lab’s X-ray facilities, the Stanford Synchrotron Radiation Lightsource (SSRL) and Linac Coherent Light Source (LCLS), and to handle massive amounts of complex data from projects such as the National Science Foundation (NSF)-DOE Vera C. Rubin Observatory, which the lab co-operates with NOIRLab. Machine learning is also becoming key to solving big questions and important problems facing our world – in drug discovery for improving human health, the search for better battery materials for sustainable energy, understanding our origins and the universe, and much more. On a national scale, SLAC is part of the DOE laboratory complex that is coming together around initiatives like Frontiers in Artificial Intelligence for Science, Security and Technology (FASST), which aims to tackle national and global challenges.

Unraveling the mysteries of the universe

Among SLAC's largest projects is the NSF-DOE Vera C. Rubin Observatory, for which SLAC built the Legacy Survey of Space and Time (LSST) Camera, the world's largest digital camera for astrophysics and cosmology. SLAC will jointly operate the observatory and its LSST, a ten-year survey aimed at understanding dark matter, dark energy and more. “The Rubin Observatory is going to give us a whole new window on how the universe is evolving. In order to make sense of these new data, and use them to understand the nature of dark matter and dark energy, we will need new tools,” said Risa Wechsler, Stanford University humanities and sciences professor and professor of physics and of particle physics and astrophysics, and director of the Kavli Institute for Particle Astrophysics and Cosmology at SLAC and Stanford.

SLAC completes construction of the largest digital camera ever built for astronomy

Set in place atop a telescope in Chile, the 3,200-megapixel LSST Camera will help researchers better understand dark matter, dark energy and other mysteries of our universe.

Next year, Rubin will perform nightly surveys of the Southern Hemisphere sky and send around 20 terabytes of images each night to several data centers, including the U.S. Data Facility at SLAC. These images are so large that displaying one at full resolution would take over 375 4K high-definition TVs – and manually sifting through them to track billions of celestial objects and identify anything new, or anomalous, would be impossible.
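The screen comparison above can be checked with a quick back-of-the-envelope calculation. The sketch below assumes a 4K TV means a standard 3840 x 2160 UHD panel, which the article does not specify, and uses the camera's 3,200-megapixel figure; the function name is illustrative only.

```python
# Rough check of the "over 375 4K TVs" comparison.
CAMERA_PIXELS = 3_200 * 1_000_000   # 3,200 megapixels, as stated in the article
UHD_4K_PIXELS = 3840 * 2160         # assumption: 4K UHD panel, about 8.3 megapixels


def tvs_needed(image_pixels: int, screen_pixels: int) -> float:
    """Number of screens required to show every image pixel at full resolution."""
    return image_pixels / screen_pixels


tv_count = tvs_needed(CAMERA_PIXELS, UHD_4K_PIXELS)
print(f"About {tv_count:.0f} screens")  # prints "About 386 screens"
```

Under that assumption the result lands a little above the article's "over 375" figure, so the comparison holds.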

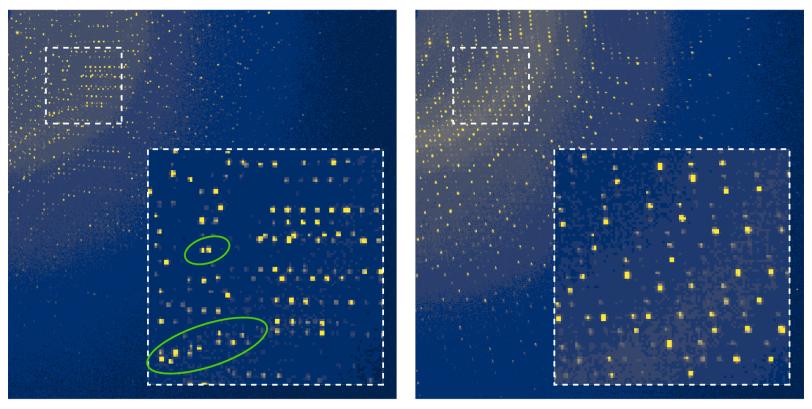

AI, on the other hand, is good at detecting anomalies. For example, machine learning can help more quickly determine if a change in brightness, one kind of anomaly, is due to an irrelevant artifact or something truly new, such as a supernova in a distant galaxy or a theorized but previously undetected astronomical object. “You’re trying to extract a subtle signal from a massive complex dataset that is only tractable with the help of AI/machine learning tools and techniques,” said Adam Bolton, SLAC scientist and lead of the Rubin U.S. Data Facility.
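To make the idea of flagging a brightness change concrete, here is a minimal sketch using a simple statistical rule rather than machine learning. It is not the Rubin pipeline: the function name, the threshold and the sample values are all hypothetical. It only illustrates the first step of spotting a measurement that stands out, after which a trained model would have to decide whether the outlier is an artifact or a real event.

```python
from statistics import median


def flag_brightness_anomalies(brightness, threshold=5.0):
    """Return indices of measurements far from the typical brightness.

    Uses a robust z-score built from the median and the median absolute
    deviation (MAD), so a single bright outlier does not distort the baseline.
    """
    center = median(brightness)
    mad = median(abs(b - center) for b in brightness)
    if mad == 0:
        return []  # no measurable scatter, so nothing can be ranked as unusual
    scale = 1.4826 * mad  # makes MAD comparable to a standard deviation
    return [i for i, b in enumerate(brightness)
            if abs(b - center) / scale > threshold]


# Hypothetical brightness measurements of one object over nine nights.
light_curve = [10.1, 9.9, 10.0, 10.2, 9.8, 10.0, 14.5, 10.1, 9.9]
print(flag_brightness_anomalies(light_curve))  # prints [6]
```

A rule like this is cheap but cannot tell a supernova from a detector glitch, which is the harder classification problem the article describes handing to machine learning.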

Machine learning can also aid in studying the smallest objects in the universe, such as neutrinos, considered the most abundant matter particle. The Deep Underground Neutrino Experiment (DUNE) will help scientists investigate the properties of neutrinos to answer fundamental questions about the origins and evolution of the universe, such as how and why the universe is dominated by matter. The DUNE near detector currently being constructed at Fermi National Accelerator Laboratory will capture images of thousands of neutrino interactions each day. Manually analyzing the millions of images expected from the near detector will be overwhelming, so work is underway to develop and train a machine learning neural network tool, an approach inspired by the way neurons work in human brains, to automatically analyze the images.

“Current manual and software methods will take months or years to process and analyze the millions of images,” said Kazuhiro Terao, SLAC staff scientist. “With AI, we are very excited about getting high quality results in just a week or two. This will speed up the physics discovery process.”

Transforming drug design and materials discovery

Research aided by AI could have more direct, real-world benefits as well. For instance, researchers working to discover or design new materials and drugs are faced with a daunting task of choosing from a pool of hundreds to millions of candidates. Machine learning tools, such as Bayesian algorithm execution, can power up the process by making suggestions on what step to take next or which protein or material to try, instead of researchers painstakingly trying one candidate after another.

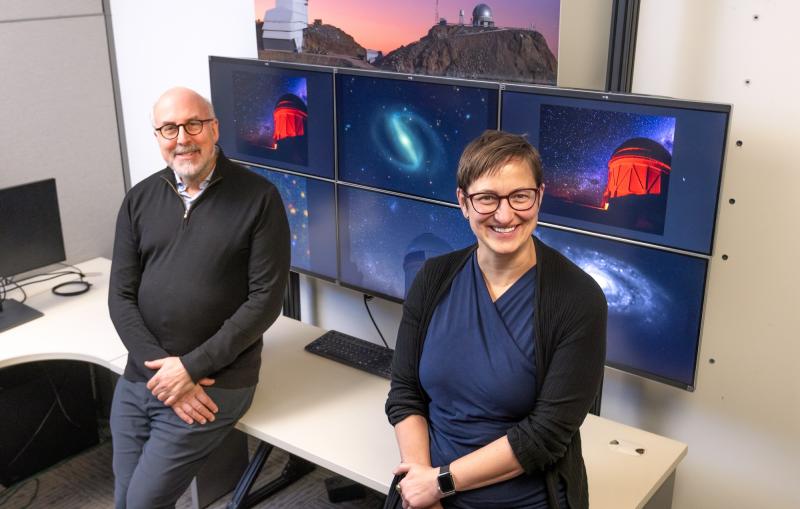

This is one of the goals of a DOE-funded BRaVE consortium led by SSRL scientists Derek Mendez and Aina Cohen, who co-directs SSRL’s Structural Molecular Biology Resource. The team is advancing U.S. biopreparedness, in part by developing new AI tools that simplify time-consuming and complex steps involved in the structure-based drug design process. For example, AI tools on SSRL’s Structural Molecular Biology beamlines are busy analyzing diffraction images, which help researchers understand the structure of biological molecules, how they function and how they interact with new drug-like compounds. These tools provide real-time information on data quality, such as the integrity of the protein crystals being studied. Ideally, single crystals are used for these experiments, but the crystals are not always well ordered. Sometimes, they break or stick together, potentially compromising data quality, and these problems are often not apparent until researchers have analyzed the collected data.

These experiments produce hundreds to many thousands of diffraction patterns at high rates. Manually inspecting all that data to weed out defective crystal patterns will be nearly impossible, so researchers are turning to AI tools to automate this process. “We developed an AI model to assess the quality of diffraction pattern images 100 times faster than the process we used before,” said Mendez. “I like using AI for simplifying time-consuming tasks. That can really help free up time for researchers to explore other, more interesting aspects of their research. Overall, I am excited about finding ways that artificial and natural intelligence can work together to improve research quality.”

Researchers at LCLS are now using these tools, said Cohen, and according to Mendez, there’s a growing interplay between the two facilities. “LCLS is working on tools for diffraction analysis that we are interested in applying at SSRL,” added Mendez. “We are building that synergy.”

Frédéric Poitevin, a staff scientist at LCLS, agrees. “Working together is key to facing the unique challenges at both facilities.”

Among the many AI tools Poitevin’s team is developing is one that speeds up the analysis of complex diffraction images that help researchers visualize the structure and behavior of biological molecules in action. Extracting this information involves considering subtle variations in the intensity of millions of pixels across several hundred thousand images. Kevin Dalton, a staff scientist on Poitevin’s team, trained an AI model that can analyze the large volume of data and search for important faint signals much more quickly and accurately than traditional methods.

“With the AI models that Kevin is developing, we have the potential at LCLS and SSRL to open a completely new window into the molecular structure and behavior we have been after but have never been able to see with traditional approaches,” said Poitevin.

Opening doors for discovery and innovation

Beyond specific AI projects, Wechsler said, the growing importance and utility of AI in many science areas is opening more doors for collaboration among SLAC scientists, engineers and students. “I am excited about the SLAC AI community developing,” she said. “We have more to do and learn from each other across disciplines. We have many commonalities in what we want to accomplish in astronomy, particle physics and other areas of science at the lab, so there is a lot of potential.”

The increasing opportunities for collaboration are driving teams across SLAC to identify where AI tools are needed and develop workflows that can be applied across the lab. This effort is an important part of the lab’s overall strategy in harnessing AI and computing power for advancing science today and into the future.

Adds Ratner, “By also leveraging our partnership with AI experts at Stanford University, we work together to deepen our AI knowledge and build AI tools that enable discovery and innovative technology for exploring science at the biggest, smallest and fastest scales.”

The research was supported by the DOE Office of Science. LCLS and SSRL are DOE Office of Science user facilities.

For questions or comments, contact SLAC Strategic Communications & External Affairs at communications@slac.stanford.edu.

About SLAC

SLAC National Accelerator Laboratory explores how the universe works at the biggest, smallest and fastest scales and invents powerful tools used by researchers around the globe. As world leaders in ultrafast science and bold explorers of the physics of the universe, we forge new ground in understanding our origins and building a healthier and more sustainable future. Our discovery and innovation help develop new materials and chemical processes and open unprecedented views of the cosmos and life’s most delicate machinery. Building on more than 60 years of visionary research, we help shape the future by advancing areas such as quantum technology, scientific computing and the development of next-generation accelerators.

SLAC is operated by Stanford University for the U.S. Department of Energy’s Office of Science. The Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time.